This project explores how image-based lighting can be used to synthetically insert a 3D object into a scene. One way to relight an object is to capture a 360-degree panoramic (omnidirectional) HDR photograph of the scene, which records the light arriving at the camera position from every direction (hence the term image-based lighting). In this project, the panorama is captured by photographing a spherical mirror. A normal camera cannot capture the full relative scale of a scene's lighting in a single exposure because of its limited dynamic range: bright regions clip and dark regions lose detail. This project implements three approaches to building an HDR image that accurately captures the scale of a scene's lighting from several LDR exposures. After merging the captured LDR images into an HDR image, we can create an environment map and use it to render a 3D object into the original scene with Blender. The LDR images were captured with exposure times of 1/16, 1/64, and 1/256 seconds.

The first and simplest approach is to normalize each LDR image as if it had been exposed for one second, which is done by dividing each image by its exposure time. The normalized images are then merged into an HDR image by averaging them pixel by pixel, separately for each RGB channel. Although this is simple to implement, outliers such as clipped or very dark pixels can easily dominate the average.
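The naive merge described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `merge_naive` is hypothetical, and the images are assumed to be float arrays scaled to [0, 1].

```python
import numpy as np

def merge_naive(ldr_images, exposure_times):
    """Average exposure-normalized LDR images into one HDR estimate.

    ldr_images: list of float arrays in [0, 1], each of shape (H, W, 3) (assumed layout).
    exposure_times: exposure time in seconds for each image.
    """
    # Dividing by the exposure time rescales each image to a one-second exposure.
    normalized = [img / t for img, t in zip(ldr_images, exposure_times)]
    # Plain per-pixel, per-channel mean across the exposure stack.
    return np.mean(np.stack(normalized, axis=0), axis=0)

# Synthetic stack: constant irradiance 4.0, dim enough that nothing clips.
exposures = [1 / 16, 1 / 64, 1 / 256]
ldrs = [np.full((2, 2, 3), 4.0 * t) for t in exposures]
hdr = merge_naive(ldrs, exposures)
```

With unclipped, noise-free inputs every normalized image agrees, so the mean recovers the irradiance. Once a pixel clips in the long exposure, its normalized value is too low and pulls the average down.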

The only difference between naive LDR merging and weighted LDR merging is how the average is taken. In the weighted approach, mid-range pixel values receive higher weights and outliers (values near the dark and bright extremes) receive lower weights. This reduces the outlier problem of naive merging, but the result still does not capture the full range of the image.
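A minimal sketch of the weighted average follows. The write-up does not state which weighting function was used, so the triangular "hat" weight here is an assumption, as are the names `hat_weight` and `merge_weighted`.

```python
import numpy as np

def hat_weight(z):
    # Assumed weighting: 1 at mid-gray (0.5), falling linearly to 0 at 0 and 1.
    return 1.0 - np.abs(2.0 * z - 1.0)

def merge_weighted(ldr_images, exposure_times, eps=1e-8):
    """Weighted mean of exposure-normalized images (float inputs in [0, 1])."""
    num = np.zeros_like(ldr_images[0], dtype=np.float64)
    den = np.zeros_like(num)
    for img, t in zip(ldr_images, exposure_times):
        w = hat_weight(img)      # weight depends on the raw pixel value
        num += w * img / t       # weighted, exposure-normalized value
        den += w
    # eps guards against pixels whose weight is zero in every exposure.
    return num / np.maximum(den, eps)

# Irradiance 40.0 clips to 1.0 in the 1/16 s exposure.
exposures = [1 / 16, 1 / 64, 1 / 256]
ldrs = [np.clip(np.full((2, 2, 3), 40.0 * t), 0.0, 1.0) for t in exposures]
hdr = merge_weighted(ldrs, exposures)
```

In this synthetic case the clipped exposure gets zero weight, so the merge recovers 40.0, whereas a plain average of the same stack gives 32.0.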

In practice, pixel intensity is a non-linear function of exposure, both because of clipping and because of the camera response function, which for most cameras compresses very dark and very bright exposure readings into a smaller range of intensity values. To convert pixel values to true radiance values, we need to estimate this response function. This paper outlines how to do so, although the implementation of the response function estimation was provided to me. Of the three approaches, this one produces the best results.
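Once a response curve is available, one common way to apply it is to average log radiance across exposures. The sketch below is not the provided implementation: it assumes a single curve `g` of 256 log-exposure values shared by all channels, 8-bit inputs, and a hat-shaped weight, and the name `merge_calibrated` is hypothetical.

```python
import numpy as np

def merge_calibrated(ldr_images, exposure_times, g):
    """Merge uint8 images of shape (H, W, 3) using a log response curve g (256 values)."""
    z = np.arange(256)
    # Assumed weighting: trust mid-range pixel values, distrust 0 and 255.
    w_table = np.minimum(z, 255 - z).astype(np.float64)
    num = np.zeros(ldr_images[0].shape, dtype=np.float64)
    den = np.zeros_like(num)
    for img, t in zip(ldr_images, exposure_times):
        w = w_table[img]
        # g maps a pixel value to log exposure; subtracting log(t) gives log radiance.
        num += w * (g[img] - np.log(t))
        den += w
    # Pixels with zero total weight fall back to log radiance 0 (radiance 1).
    return np.exp(num / np.maximum(den, 1e-8))

# Toy response curve for an idealized linear camera (an assumption for the demo).
g = np.log((np.arange(256) + 1) / 256.0)
exposures = [1 / 16, 1 / 64, 1 / 256]
pixel_values = [255, 159, 39]  # radiance 40 seen through the toy curve; 255 is clipped
ldrs = [np.full((2, 2, 3), v, dtype=np.uint8) for v in pixel_values]
hdr = merge_calibrated(ldrs, exposures, g)
```

In a real pipeline `g` would come from the estimation step, typically one curve per color channel.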

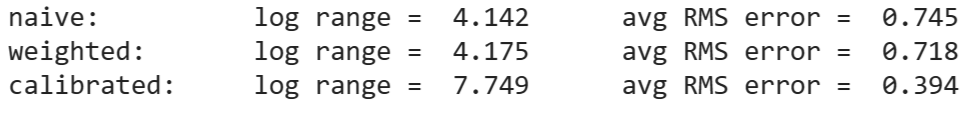

The following figure shows the log range and RMS error for each method. The range increased and the RMS error decreased with each successive method, with the calibrated approach performing best.

To use Blender to synthetically insert a 3D object into a scene, we need to create a panoramic environment map. To perform the transformation, we need the mapping between the mirrored sphere domain and the equirectangular domain. We can calculate the normals of the sphere (N) and assume the viewing direction (V) is constant. We then calculate the reflection vectors with R = V - 2 * dot(V,N) * N, which give the direction from which light arrives from the world before bouncing off the sphere into the camera. The reflection vectors can then be converted to spherical coordinates, which give the latitude and longitude (phi and theta) of each pixel (fixing the distance to the origin, r, to be 1). The code for converting reflection vectors to the equirectangular domain was provided to me.
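The normal and reflection computation can be sketched as follows. This is an illustration, not the project's code: it assumes the mirror ball exactly fills a square crop, an orthographic camera looking along -z (so V = (0, 0, -1)), y pointing up, and one particular angle convention for phi and theta. The function name `mirror_ball_reflections` is hypothetical.

```python
import numpy as np

def mirror_ball_reflections(size):
    """Per-pixel reflection vectors for a mirror ball filling a size x size crop."""
    xs = np.linspace(-1.0, 1.0, size)
    ys = np.linspace(1.0, -1.0, size)          # image row 0 is the top of the sphere
    x, y = np.meshgrid(xs, ys)
    r2 = x**2 + y**2
    inside = r2 <= 1.0                          # pixels that actually lie on the sphere
    z = np.sqrt(np.clip(1.0 - r2, 0.0, None))   # unit sphere: x^2 + y^2 + z^2 = 1
    N = np.stack([x, y, z], axis=-1)            # surface normals (unit length where inside)
    V = np.array([0.0, 0.0, -1.0])              # constant viewing direction (assumed)
    d = N @ V                                   # dot(V, N) for every pixel
    R = V - 2.0 * d[..., None] * N              # R = V - 2 * dot(V, N) * N
    # Assumed convention: phi is the polar angle from +y, theta the azimuth around y.
    phi = np.arccos(np.clip(R[..., 1], -1.0, 1.0))
    theta = np.arctan2(R[..., 0], R[..., 2])
    return R, phi, theta, inside

R, phi, theta, inside = mirror_ball_reflections(5)
```

At the center pixel the normal faces the camera, so the reflection points straight back along +z. At the rim the ray grazes the sphere and continues along V. Values outside the `inside` mask are not meaningful.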

Using Blender and the environment map from the previous section, we can now render a 3D object into a scene. We create an object mask and apply M*R + (1-M)*I + (1-M)*(R-E)*c to get the final composite. In this equation, R is the rendered image with objects, E is the rendered image without objects, M is the object mask, and I is the background image. The constant c controls the strength of the shadow effect.
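The compositing equation translates directly to array arithmetic. In this sketch the function name `composite` and the tiny one-row test images are illustrative assumptions. All inputs are taken to be float arrays of the same shape, with M equal to 1 on the object and 0 elsewhere.

```python
import numpy as np

def composite(R, E, M, I, c=1.0):
    """Differential-rendering composite: M*R + (1-M)*I + (1-M)*(R-E)*c."""
    object_term = M * R                      # rendered object where the mask is on
    background_term = (1.0 - M) * I          # original photo everywhere else
    shadow_term = (1.0 - M) * (R - E) * c    # lighting change caused by the object
    return object_term + background_term + shadow_term

# One-row example: [object pixel, shadowed pixel, untouched pixel].
I = np.array([[0.5, 0.5, 0.5]])   # background image
E = np.array([[0.6, 0.6, 0.6]])   # render without objects
R = np.array([[0.9, 0.4, 0.6]])   # render with objects
M = np.array([[1.0, 0.0, 0.0]])   # object mask
out = composite(R, E, M, I, c=2.0)
```

The object pixel takes the rendered value, the shadowed pixel is darkened by c times the difference R - E, and the untouched pixel keeps the background value. A larger c can push values outside the displayable range, so clipping before saving may be needed.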

Background Image

Rendering Without Objects

Rendering with Objects

Final Composited Image