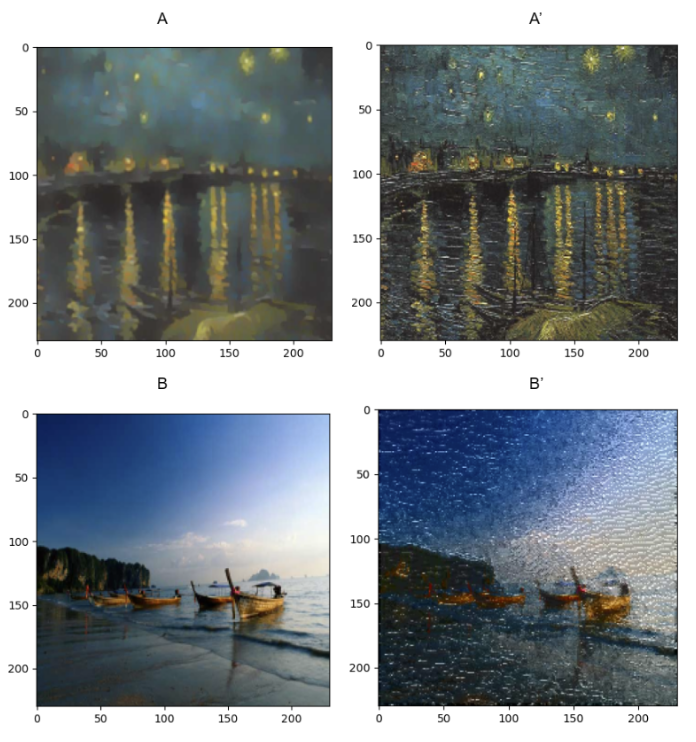

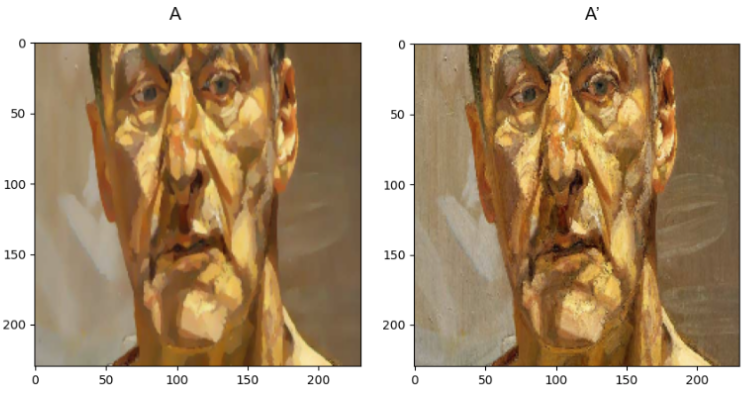

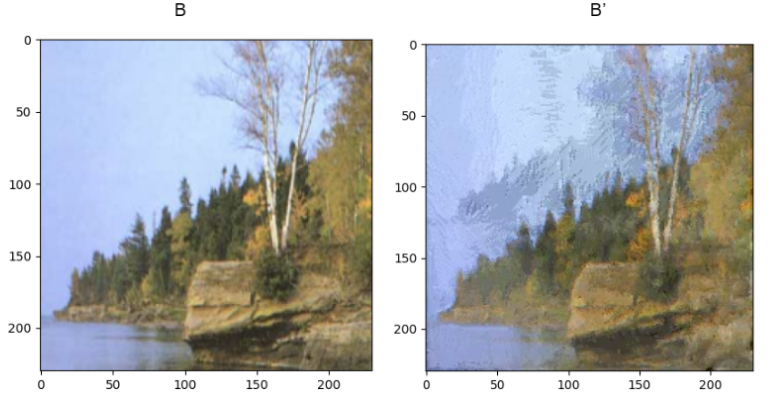

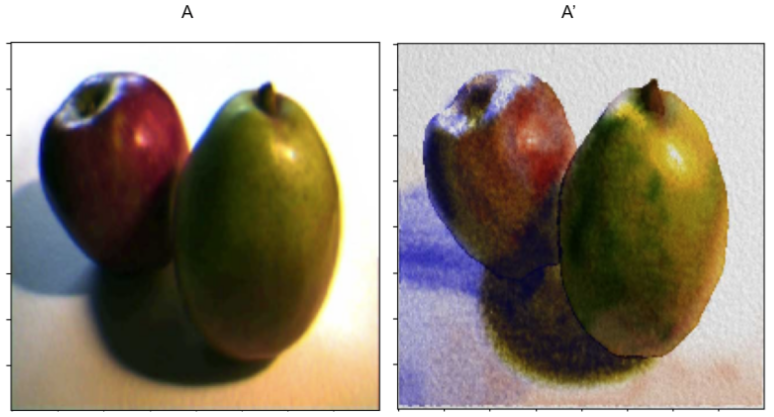

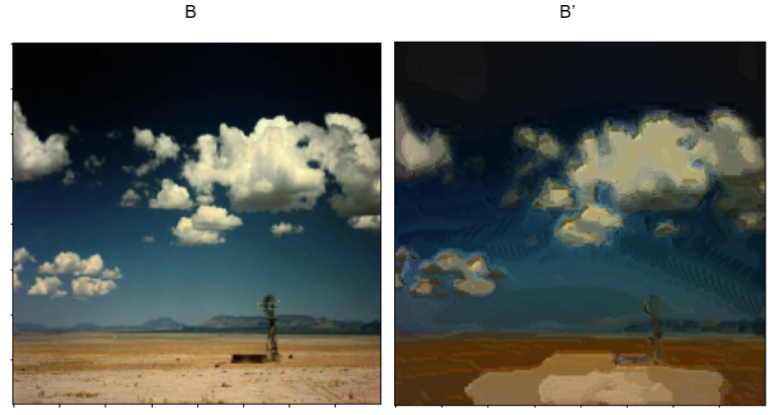

Image Analogies lets us model any filter, linear or nonlinear, without knowing how the filter is applied. All we need is three input images: an unfiltered-filtered pair, and a third image that the filter will be applied to. These three images are labeled A, A', and B, respectively, in the figure to the right. Put more concisely, we want to return an image B' that relates to B in the same way that A' relates to A. This project and implementation are based on the research paper by Hertzmann et al., which can be found at this link.

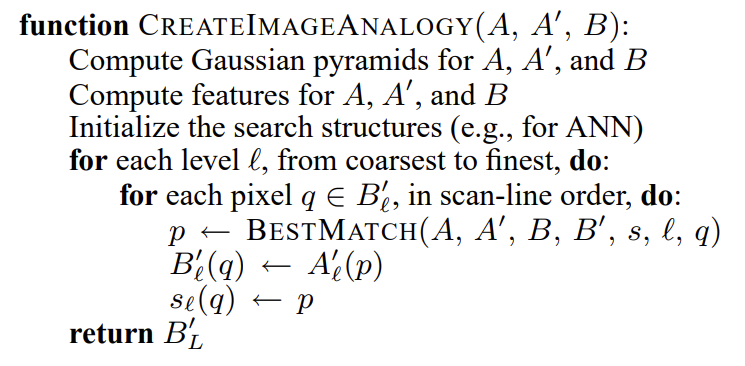

To achieve our results in image analogies, we first set up Gaussian pyramids of the luminance channel for the three images A, A', and B. At each level of the Gaussian pyramid, we iterate through each pixel of an initially empty B', the final output image, and determine the best pixel to fill in. The pseudocode for the CreateImageAnalogy method is shown below.
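Separately from that pseudocode, the pyramid setup can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the project's code: the function names (`luminance`, `blur`, `gaussian_pyramid`), the 5-tap binomial kernel used as a Gaussian approximation, and the reflect padding are all choices made for this example.

```python
import numpy as np

def luminance(rgb):
    """Y channel of YIQ for an RGB image with values in [0, 1]."""
    return rgb @ np.array([0.299, 0.587, 0.114])

def blur(img):
    """Separable 5-tap binomial blur (assumed here as a Gaussian approximation)."""
    k = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0
    padded = np.pad(img, 2, mode="reflect")
    h, w = img.shape
    # Filter along the horizontal axis, then along the vertical axis.
    rows = sum(k[i] * padded[:, i:i + w] for i in range(5))
    return sum(k[i] * rows[i:i + h, :] for i in range(5))

def gaussian_pyramid(img, levels):
    """Return [finest, ..., coarsest]; each level halves the previous one."""
    pyramid = [img]
    for _ in range(levels - 1):
        # Blur first, then keep every second pixel in each direction.
        pyramid.append(blur(pyramid[-1])[::2, ::2])
    return pyramid
```

Calling `gaussian_pyramid(luminance(image), levels)` once each for A, A', and B would give the three luminance pyramids that the synthesis loop walks through.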

We used two separate functions to determine the best pixel. The first is BestCoherenceMatch, which uses neighborhood matching to find the best matching pixel from A. Neighborhood matching takes into account the already synthesized pixels of B', the corresponding pixels of A', a 5x5 neighborhood in A and B, and a 3x3 neighborhood in the coarser levels of A, A', B, and B'. We decided to use only the luminance channel for our feature vectors because it speeds up the search for B': we have to store only one third of the channels. The second is BestApproximateMatch, which uses an approximate-nearest-neighbor (ANN) search to determine the closest pixel in the current level of the Gaussian pyramid. In our implementation, we chose a KDTree from the scikit-learn library to query the feature vectors and find our approximate pixel. Once BestApproximateMatch and BestCoherenceMatch returned results, we compared the error of each candidate pixel and chose the one with the smaller error. Pseudocode is shown below.
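Apart from that pseudocode, the matching step can be illustrated with a simplified Python sketch. It makes several assumptions that are not taken from the project: the feature vector here is only a flattened 5x5 luminance patch from A (the full feature described above also includes A', B' and the coarser level), the helper names are hypothetical, and the coherence candidate is passed in as a precomputed index rather than derived from already synthesized pixels.

```python
import numpy as np
from sklearn.neighbors import KDTree

def neighborhood_features(lum, size=5):
    """One row per pixel: the flattened size x size luminance patch around it."""
    r = size // 2
    padded = np.pad(lum, r, mode="edge")
    h, w = lum.shape
    # One shifted view of the image per patch offset (dy, dx).
    patches = [padded[dy:dy + h, dx:dx + w]
               for dy in range(size) for dx in range(size)]
    return np.stack(patches, axis=-1).reshape(h * w, size * size)

def best_approximate_match(tree, query):
    """Row-major index in A of the feature vector closest to `query`."""
    dist, idx = tree.query(query.reshape(1, -1), k=1)
    return int(idx[0, 0]), float(dist[0, 0])

def pick_best(features_a, query, idx_app, idx_coh):
    """Keep whichever candidate has the smaller squared feature error."""
    err_app = np.sum((features_a[idx_app] - query) ** 2)
    err_coh = np.sum((features_a[idx_coh] - query) ** 2)
    return idx_coh if err_coh < err_app else idx_app
```

In this sketch the tree is built once per pyramid level with `KDTree(neighborhood_features(lum_a))` and then queried once per pixel of B'.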

After looping through every level, we took the synthesized luminance channel from the finest level of the pyramid and copied over the I and Q channels from the original B image. This works because our eyes are more sensitive to changes in luminance than to changes in the color-difference channels.
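The recombination step might look like the following sketch. It assumes RGB values in [0, 1] and uses commonly quoted, rounded NTSC RGB-to-YIQ coefficients; the inverse matrix is computed numerically so the round trip stays consistent. The function names are hypothetical, not the project's.

```python
import numpy as np

# Rounded NTSC coefficients (an assumption of this sketch).
RGB_TO_YIQ = np.array([
    [0.299, 0.587, 0.114],    # Y: luminance
    [0.596, -0.274, -0.322],  # I: color difference
    [0.211, -0.523, 0.312],   # Q: color difference
])
YIQ_TO_RGB = np.linalg.inv(RGB_TO_YIQ)

def rgb_to_yiq(rgb):
    return rgb @ RGB_TO_YIQ.T

def yiq_to_rgb(yiq):
    return yiq @ YIQ_TO_RGB.T

def recombine(synth_lum, original_b_rgb):
    """Replace B's luminance with the synthesized one, keeping B's I and Q."""
    yiq = rgb_to_yiq(original_b_rgb)
    yiq[..., 0] = synth_lum
    # Clip because the new luminance can push RGB values out of range.
    return np.clip(yiq_to_rgb(yiq), 0.0, 1.0)
```

Passing B's own luminance back in returns B unchanged, which is a quick sanity check for the conversion.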

We had to make some design choices where the paper was vague. Initially, instead of doing a brute-force search at the coarsest level and at the border of the image, we passed in an expected result from the paper to fill in pixels at the border. We also didn't loop through the coarsest image in CreateImageAnalogy. This gave faster runtimes and let us produce more results. We eventually implemented the brute-force search as well, which makes the method independent of the expected result. All of our results use this method.
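For reference, a brute-force search over feature vectors can be as small as the sketch below. It assumes the candidates are stacked one per row in a NumPy array and that the error is the squared Euclidean distance; `brute_force_match` is a hypothetical name.

```python
import numpy as np

def brute_force_match(features_a, query):
    """Compare `query` against every row of `features_a` and return the best."""
    errors = np.sum((features_a - query) ** 2, axis=1)
    best = int(np.argmin(errors))
    return best, float(errors[best])
```

This costs one full pass over all candidates per query, which is why it is practical mainly at the small coarsest level and along the borders.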

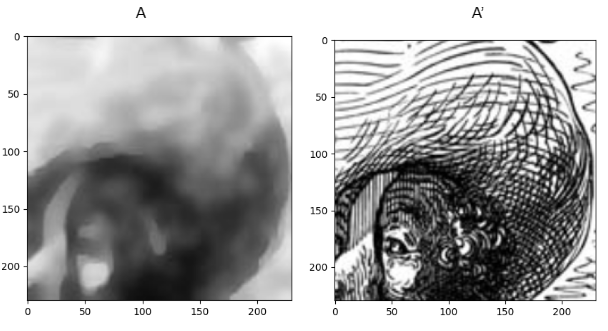

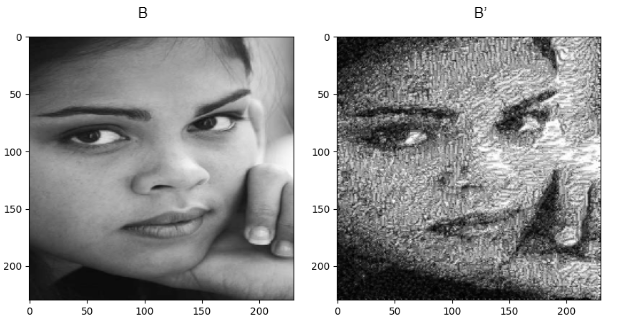

Example results for an engraving filter:

Example results for a Lucian Freud filter:

Example result for a watercolor filter:

Our main motivation for implementing image analogies was to mimic any artist's style and produce images that look as if that artist had painted them. Although we were able to capture texture with image analogies, we weren't able to capture color. Hence, we took two other group members' implementation of Color Transfer between Images (Reinhard et al.) and were able to produce the following image of boats, which captures the color and texture of a Vincent van Gogh painting.

Original Images:

Output image with mimicked texture and color: